Scientific research today is more data-intensive than ever before, with fields like genomics, bioinformatics, machine learning, and deep learning requiring vast computational resources to process complex datasets and run simulations. Enter cloud GPUs—a transformative technology that provides researchers with scalable, powerful high-performance computing (HPC) capabilities, enabling them to tackle these challenges with greater speed and efficiency.

Gone are the days of relying solely on expensive, in-house hardware or waiting for long computational cycles to finish. With cloud GPUs, research teams can now access on-demand GPU acceleration from providers like AWS, Google Cloud, and Microsoft Azure, allowing them to quickly scale up their resources, optimize workflows, and significantly reduce project timelines—all at a fraction of the cost of traditional systems.

This guide offers a comprehensive look at how cloud GPUs are revolutionizing scientific research. You’ll learn how they can enhance computational power, streamline workflows, and provide the flexibility needed to adapt to various project demands. Whether you’re a genomics researcher looking to sequence DNA faster or a machine learning expert aiming to train models more efficiently, cloud GPUs present an indispensable solution.

Let’s dive into how you can leverage cloud GPUs to accelerate your research while maintaining cost-efficiency and scalability—key factors for success in today’s fast-paced scientific environment.

Why Do Cloud GPUs Matter in Scientific Research?

A Cloud GPU is a graphics processing unit (GPU) hosted in the cloud by providers like AWS, Google Cloud, and Microsoft Azure. Unlike traditional GPUs housed in local systems, Cloud GPUs offer remote access to high-performance computing (HPC) resources, enabling scientists and researchers to handle data-intensive tasks without the need for on-premise hardware.

Cloud GPUs excel in parallel processing, making them perfect for complex scientific research. They are designed to work efficiently with tools like Python, TensorFlow, PyTorch, and MATLAB, making integration into existing research workflows seamless. The key benefits? Scalability, cost-efficiency, and ease of integration—all while boosting computational power.

Key Benefits of Cloud GPUs

- Scalability: You can scale up or down based on the project’s demands. Need more GPUs for a large-scale genomics project? No problem. Cloud platforms offer elastic GPU scaling to suit your needs at any time.

- Cost-Efficiency: Forget buying expensive hardware. With Cloud GPUs, you only pay for what you use, thanks to pay-as-you-go models or reserved instances, making it a budget-friendly option for long-term research.

- Ease of Integration: No need to overhaul your existing setup. Cloud GPUs work smoothly with popular scientific tools like Python, TensorFlow, and MATLAB, allowing you to continue working with your preferred software.

Why Cloud GPUs Matter in Scientific Research

Cloud GPUs are revolutionizing how researchers tackle data-heavy computations. Here’s why they’re crucial across various fields:

- Genomics Research: The amount of data in DNA sequencing is immense. Cloud GPUs significantly reduce processing time, allowing researchers to analyze large datasets much faster. With GPU acceleration, genome processing can run up to 5x faster than traditional CPU methods, depending on the pipeline and dataset.

- Molecular Simulations: Whether you’re working in chemistry or biophysics, Cloud GPUs can handle the massive datasets required for molecular simulations. This leads to faster discoveries in areas like drug development and material science.

- Climate Modeling: Predicting long-term climate changes requires huge datasets and complex calculations. Cloud GPUs speed up these climate simulations, providing faster results and more accurate predictions.

- Machine Learning: Training machine learning models on cloud-based GPUs accelerates research in AI, optimizing deep learning algorithms. Cloud GPUs allow researchers to process vast amounts of data simultaneously, reducing training time and improving model accuracy.

A new genomics pipeline developed by Baylor College of Medicine integrates AWS cloud computing, local HPC clusters, and supercomputing to enhance the efficiency and scalability of genome sequencing projects.

This hybrid approach enables rapid analysis of large genome cohorts, significantly reducing computational costs and time. For instance, it processed over 5,000 genome samples in under six weeks, demonstrating its potential for future large-scale sequencing efforts.

Benefits of Cloud GPUs vs Traditional High-Performance Computing (HPC)

Scalability and Flexibility

One of the standout advantages of Cloud GPUs is their scalability. Researchers can scale GPU resources up or down based on the demands of their project, ensuring they never overpay for unused capacity. Unlike traditional HPC clusters, which require upfront investment and often sit idle during off-peak times, Cloud GPUs allow you to adjust resources as needed.

For instance, during peak workloads in genomics research or molecular simulations, researchers can leverage elastic GPU scaling to access hundreds of NVIDIA GPUs in real time. This on-demand scalability helps optimize both performance and costs, particularly during critical phases of a research project.

Cloud GPUs are designed for elastic scaling, allowing researchers to scale up or down based on the specific needs of their projects. The Scalability Line Graph visually demonstrates how Cloud GPUs can easily scale to handle peak workloads, while Traditional HPC systems remain largely static, with limited capacity to scale dynamically.

The graph shows that Cloud GPUs can quickly reach full capacity and adjust as needed, while Traditional HPC requires manual adjustments and additional hardware, limiting its scalability. This emphasizes the flexibility and efficiency of cloud-based solutions for fast-growing or variable-demand projects.
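The elastic scaling described above can be reduced to a simple sizing rule. The following is a minimal sketch, not any provider’s autoscaling API: the function name `gpus_needed`, the throughput figure, the deadline, and the quota of 64 GPUs are all illustrative assumptions.

```python
import math


def gpus_needed(queued_jobs, jobs_per_gpu_hour, deadline_hours, max_gpus):
    """Return how many GPUs to request so the queue drains before the deadline.

    All parameters are hypothetical inputs a research team would measure or
    choose for itself; nothing here calls a real cloud API.
    """
    if queued_jobs <= 0:
        return 0  # nothing queued: scale to zero and stop paying
    if jobs_per_gpu_hour <= 0 or deadline_hours <= 0:
        raise ValueError("throughput and deadline must be positive")
    required = math.ceil(queued_jobs / (jobs_per_gpu_hour * deadline_hours))
    return min(required, max_gpus)  # never exceed the account quota


if __name__ == "__main__":
    # Hypothetical workload: idle, moderate, peak, then quiet again.
    for queue in (0, 40, 1200, 15):
        print(queue, "jobs ->", gpus_needed(queue, 2.0, 6.0, 64), "GPUs")
```

A fixed on-premise cluster would be sized once for the peak case; the sketch shows how a cloud deployment can instead recompute the request as the queue changes.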

Cost-Efficiency

Cost is a major concern for research institutions. With Cloud GPUs, the pay-as-you-go pricing model offers a more flexible and affordable alternative to purchasing physical GPU clusters. Traditional HPC setups require a significant upfront investment in hardware, which may not be fully utilized throughout the project’s lifecycle.

In contrast, cloud GPUs enable researchers to access high-performance resources without the burden of maintenance or capital expenditure. Research teams that transitioned to AWS for GPU-accelerated computing reported substantial savings.

Data from academic institutions shows that teams utilizing pay-as-you-go pricing saved up to 40% on compute costs, making Cloud GPUs a cost-effective solution for grant-funded projects.

Cloud GPUs offer significant cost savings, particularly for institutions with fluctuating needs. The Cost Comparison Bar Chart highlights how Cloud GPU costs remain consistent and relatively low over time, following a pay-as-you-go model, whereas Traditional HPC requires significant upfront investment and has higher ongoing maintenance costs.

The chart shows that over six months, Cloud GPUs have steadily lower costs, while Traditional HPC involves a much larger initial outlay and higher expenses for hardware maintenance. This illustrates how Cloud GPUs provide a more flexible and cost-effective solution for institutions seeking to reduce their capital expenditure.
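To make the cost comparison concrete, here is a rough break-even sketch. It assumes a very simple cost model (a one-time hardware purchase plus flat monthly upkeep on one side, a flat hourly rate on the other), and every number in the example is a made-up placeholder rather than real provider pricing.

```python
def break_even_month(hardware_cost, monthly_upkeep, hourly_rate, gpu_hours_per_month):
    """Month at which cumulative cloud spend catches up with on-premise spend.

    Returns None when cloud spend per month never exceeds on-premise upkeep,
    i.e. the cloud option stays cheaper indefinitely under this simple model.
    """
    monthly_cloud = hourly_rate * gpu_hours_per_month
    if monthly_cloud <= monthly_upkeep:
        return None
    return hardware_cost / (monthly_cloud - monthly_upkeep)


if __name__ == "__main__":
    # Hypothetical figures: $60,000 cluster, $500/month upkeep, $3 per GPU-hour.
    print(break_even_month(60_000, 500, 3.0, 1_000))  # heavy, sustained use
    print(break_even_month(60_000, 500, 3.0, 150))    # light, bursty use
```

Under these assumed numbers, heavy sustained use reaches break-even after about two years, while light use never does, which is why fluctuating workloads tend to favor pay-as-you-go.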

Performance and Speed

When it comes to performance, NVIDIA GPUs such as the Tesla V100 and A100, available through cloud platforms like AWS and Google Cloud, deliver far superior results compared to traditional CPUs. These GPUs are specifically designed for parallel computing, enabling them to handle multiple calculations simultaneously, a key feature for tasks like climate modeling and deep learning.

Benchmarks consistently show that Cloud GPUs outperform CPUs in both speed and efficiency. For instance, running climate simulations on a Tesla V100 results in processing times that are up to 3x faster than on local HPC clusters. In deep learning models, the A100 GPU demonstrates GPU acceleration that significantly reduces training time, making it ideal for cutting-edge research.

The GPU Performance Bar Chart compares the NVIDIA A100 and Tesla V100 across different tasks, such as AI model training, genomic data processing, and molecular simulations. The chart highlights the superior performance of the NVIDIA A100, which is up to 20x faster than the Tesla V100 in certain tasks.

The visual shows how using NVIDIA A100 reduces processing times for large datasets, making it the ideal choice for high-performance applications in fields like genomics and climate modeling. This demonstrates the clear advantages of newer GPU models in handling complex computations at faster speeds.

Energy Efficiency

Energy consumption is a major factor when comparing Cloud GPUs and Traditional HPC systems. Cloud providers operate highly optimized data centers that significantly reduce energy usage compared to traditional HPC, which requires substantial energy for operation and cooling.

The Energy Efficiency Pie Chart splits combined energy use between the two approaches, attributing approximately 30% to Cloud GPUs and 70% to Traditional HPC. This reflects the large-scale energy savings possible when moving to cloud-based solutions.

Key Differences between Cloud GPUs and Traditional HPC

| Factor | Cloud GPUs | Traditional High-Performance Computing (HPC) |
|---|---|---|
| Scalability | Highly scalable: Resources can be scaled up or down based on project needs. Pay only for what you use. | Limited scalability: Requires physical hardware expansion, which can be costly and time-consuming. |
| Cost | Cost-efficient: Offers pay-as-you-go models and reserved instances. No upfront hardware investment is needed. | High upfront costs: Requires significant capital investment in physical infrastructure and ongoing maintenance costs. |
| Performance | High performance: Access to cutting-edge GPUs like NVIDIA A100 and V100, optimized for parallel processing and AI-driven research. | Stable performance: Performance is fixed, based on the installed hardware. Cannot be upgraded easily without replacing components. |
| Maintenance | Minimal maintenance: Managed by cloud service providers like AWS, Google Cloud, and Azure. Providers handle updates and hardware maintenance. | High maintenance: Requires in-house IT teams to manage hardware updates, repairs, and cooling systems. |
| Access to Latest Technology | Immediate access: Cloud providers offer instant access to the latest GPUs and updates. | Delayed access: Upgrading to new technologies requires purchasing new hardware and can be slow to implement. |
| Deployment Speed | Fast deployment: Can deploy and access GPU instances almost instantly with pre-configured environments for research tools like TensorFlow, PyTorch, and MATLAB. | Slow deployment: Setting up HPC infrastructure can take weeks or months, including hardware installation, configuration, and testing. |
| Elasticity | Elastic resource allocation: Resources can be allocated and deallocated dynamically, allowing more flexibility during peak workloads. | Fixed resources: Resource allocation is static, making it difficult to adjust to fluctuating demands without buying new hardware. |
| Energy Efficiency | Energy-efficient: Cloud providers typically use optimized, large-scale data centers that improve energy efficiency. | Energy-intensive: HPC systems require significant energy for operation and cooling, increasing operating costs. |
| Security and Compliance | Built-in security features: Providers offer advanced security protocols (e.g., encryption, multi-factor authentication) and compliance with regulations like HIPAA and GDPR. | Custom security setups: Requires in-house teams to implement security protocols. Compliance with regulations must be manually ensured. |
| Flexibility in Software | Flexible software support: Easily integrates with a variety of frameworks (e.g., TensorFlow, PyTorch, MATLAB, R), supporting a wide range of scientific workflows. | Limited flexibility: HPC systems can support a variety of software, but updates or changes often require extensive reconfiguration. |
| Geographical Accessibility | Globally accessible: Researchers can access Cloud GPUs from anywhere in the world, making collaboration easier. | Limited to physical location: Access is restricted to the location where the HPC hardware is physically installed. Remote access can be set up but is more complex. |
| Data Transfer and Storage | Efficient data transfer: Optimized for large data transfer across regions. Cloud storage options ensure flexibility in data management. | Challenging data transfer: Data transfer between different HPC locations or external networks can be slower and more complex. |

The given table provides a quick overview of the key differences between Cloud GPUs and Traditional HPC systems, covering aspects like scalability, cost, maintenance, and performance.

The table highlights how Cloud GPUs offer elastic scaling, cost-efficiency, and automatic updates, while Traditional HPC requires large upfront investments and is slower to scale.

Top Cloud GPU Providers for Scientific Research: AWS, Google Cloud, and Azure

AWS (Amazon Web Services)

AWS offers robust Cloud GPU solutions tailored for scientific research, with options like Elastic GPU and EC2 Instances powered by NVIDIA Tesla GPUs (such as the Tesla V100). These GPUs provide exceptional performance for GPU-accelerated computing tasks, making them ideal for research involving machine learning, genomics, and molecular simulations.

- Pricing Models: AWS offers flexible pricing with pay-as-you-go options, allowing researchers to only pay for the GPU resources they use. For long-term projects, reserved instances offer significant cost savings, providing dedicated access to GPU resources over extended periods.

- Academic Access: Many researchers take advantage of AWS’s grant programs, such as the AWS Cloud Credits for Research, to access cloud GPUs at reduced costs.

These grants are designed to help academic institutions leverage AWS’s powerful GPU infrastructure for cutting-edge scientific projects.

Google Cloud

Google Cloud stands out for its AI-focused infrastructure, which includes TensorFlow integration and TPUs (Tensor Processing Units) designed for machine learning and deep learning workloads. This makes Google Cloud GPUs a strong option for projects that require AI-based data processing, such as in genomics and bioinformatics.

With seamless integration of TensorFlow, Google Cloud makes it easy for researchers to accelerate model training and processing, providing scalability and flexibility for large datasets.

According to Google Cloud’s case study on the Broad Institute, the move to Google Cloud addressed the difficulty of handling vast genomic datasets and the processing delays caused by limited in-house infrastructure. The case study reports that the shift improved genome sequencing speed by 400%, reducing the time to sequence a human genome from hours to just 12 minutes.

It also enhanced scalability, allowing researchers to dynamically adjust to spikes in demand and analyze up to 12 terabytes of data daily. The cloud platform offered better data security, ensuring privacy and control over sensitive genomic data while enabling seamless data sharing among global collaborators.

Microsoft Azure

Microsoft Azure offers a comprehensive set of GPU offerings, including NVIDIA Tesla V100 and A100 GPUs, specifically designed for large-scale simulations and HPC environments. Azure’s strength lies in its focus on data security and compliance, making it a preferred choice for healthcare and genomic research.

- Data Security: With robust security frameworks, Azure ensures compliance with regulations like HIPAA and GDPR, critical for handling sensitive research data in fields like genomics and healthcare.

- Azure HPC: For large-scale scientific simulations, Azure HPC provides cost-effective GPU clusters that deliver powerful processing capabilities for tasks such as climate modeling and drug discovery.

Real-World Applications of Cloud GPUs in Scientific Fields

Cloud GPUs are transforming various scientific fields by providing the computational power necessary to handle large-scale data processing and simulations. From genomics to climate modeling and AI-driven research, cloud GPUs enable researchers to achieve breakthroughs faster and more efficiently than traditional methods.

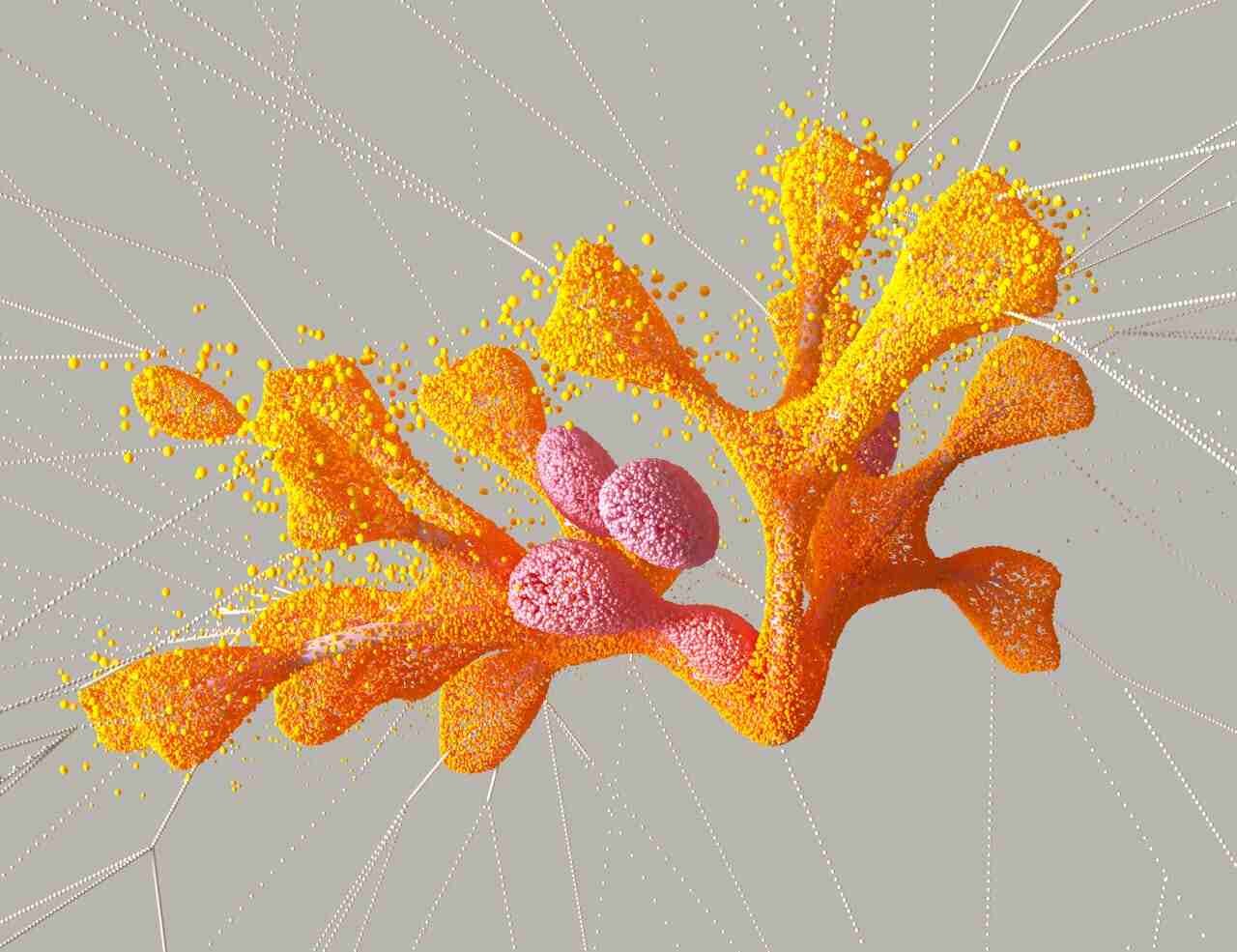

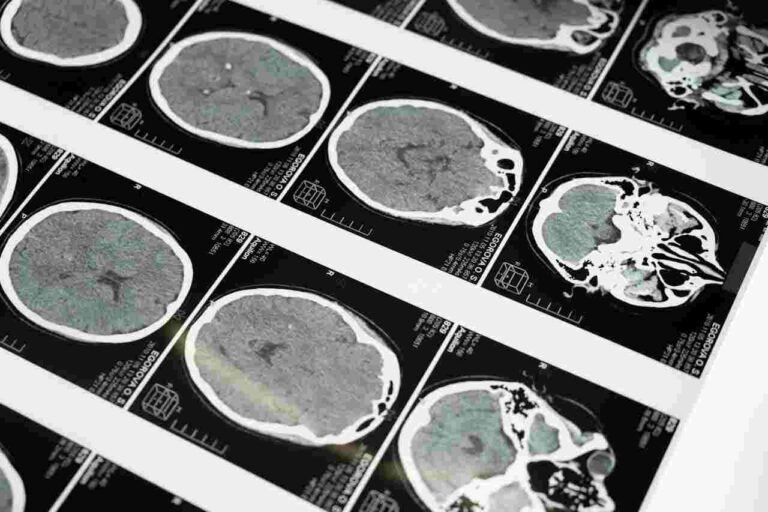

How Do Cloud GPUs Speed Up DNA Sequencing in Genomics Research?

In genomics research, the sheer volume of data generated by DNA sequencing can be overwhelming. Cloud GPUs help accelerate tasks like sequence alignment, variant discovery, and large-scale data analysis.

Researchers using cloud GPUs for next-generation sequencing (NGS) can process vast genomic datasets faster, streamlining critical tasks like genome assembly, variant calling, and haplotype phasing. These GPU-accelerated processes enhance the analysis of single-cell sequencing and whole-genome sequencing (WGS), leading to faster breakthroughs in personalized medicine and population genomics.

Bioinformatics tools like GATK (Genome Analysis Toolkit) and BLAST (Basic Local Alignment Search Tool) benefit significantly from GPU acceleration. GATK, often used for analyzing high-throughput sequencing data, takes advantage of the parallel processing offered by GPUs, leading to faster genome alignment and variant discovery.

By leveraging GPU-powered cloud resources, researchers have reduced DNA sequence analysis time by over 40%, allowing for faster breakthroughs in personalized medicine and genetic research.

These GPU-driven enhancements are crucial for large-scale research, such as genome-wide association studies (GWAS), which involve analyzing the genomes of thousands of individuals.

Climate Science and Environmental Modeling

Predicting climate patterns and environmental changes requires processing vast datasets and running intricate simulations. Cloud GPUs are revolutionizing climate science by enabling scientists to model climate change scenarios and extreme weather patterns much faster than traditional HPC systems.

For instance, a case study published by Amazon describes how Climate X, a climate risk analytics company, leverages AWS for its computational and storage needs. By utilizing AWS’s scalable cloud infrastructure, Climate X collects and processes vast amounts of climate data (amounting to petabytes), enabling precise climate risk projections.

This infrastructure allows the company to analyze trillions of data points, improving the accuracy of its risk assessments and providing clients with actionable insights into the potential impacts of climate change on their assets and operations.

AWS’s flexibility and power are critical in supporting Climate X’s mission to drive climate resilience through advanced data analytics. This capability aids in understanding the impact of global warming and in developing mitigation strategies.

How Do Cloud GPUs Accelerate AI and Machine Learning in Scientific Research?

Cloud GPUs are pivotal in scientific research using AI and machine learning. AI-driven research is advancing rapidly, especially in areas like drug discovery and protein folding simulations.

Cloud GPUs expedite deep learning and computational biology models, enabling faster and more automated drug discovery by analyzing vast molecular datasets to identify potential drug candidates. These models are also crucial for complex tasks such as accurate prediction of biomolecular interactions, protein folding, and small molecule screening, all of which accelerate breakthroughs in biopharmaceutical research.

Cloud GPUs play a critical role in accelerating these AI and machine learning projects by providing the necessary computational power for training models and processing massive datasets.

Researchers rely on frameworks like TensorFlow and PyTorch to develop deep learning models for tasks such as drug molecule prediction or analyzing complex biological data.

DeepMind’s AlphaFold, a significant advancement in protein structure prediction, relied on large-scale accelerator hardware to predict protein structures, addressing one of biology’s greatest challenges.

Deep learning frameworks such as TensorFlow and PyTorch integrate with cloud platforms like Google Cloud and AWS, which provide scalable GPU resources so researchers can scale up model training without the need for physical infrastructure.

With GPU acceleration, AI research has been able to reduce training times by up to 60%, allowing for faster deployment of new models in fields like healthcare and biotechnology.

How to Set Up and Optimize Cloud GPUs for Scientific Research?

Step-by-Step Guide to Cloud GPU Setup

Setting up Cloud GPU instances on platforms like AWS, Google Cloud, or Azure can be a straightforward process, but it’s essential to configure them correctly for your specific research tasks, whether it’s for machine learning or data processing.

- Choose a Cloud Platform: Sign in to your preferred provider, such as AWS, Google Cloud, or Azure.

- Select the Appropriate Instance Type:

  - On AWS, go to EC2 and select instances like P3 or P4, which are powered by NVIDIA Tesla GPUs (e.g., Tesla V100 or A100).

  - On Google Cloud, create a Compute Engine VM (or use AI Platform) and choose NVIDIA A100 or V100 GPUs.

  - On Azure, use N-series Virtual Machines for GPU-based computing.

- Configure the Instance:

  - Set the number of GPUs based on your project’s needs (e.g., 1-4 GPUs for smaller tasks, more for larger computations).

  - Allocate appropriate memory and CPU resources to ensure the instance runs smoothly.

- Launch the Instance: Once configured, launch the instance and access it via SSH to begin setting up your research environment.

- Install Necessary Libraries: Install TensorFlow, PyTorch, or any other required software. Most platforms offer pre-built images with these tools installed, in which case this step may already be done.
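After connecting to the instance over SSH, it is worth confirming that the GPUs are actually visible before starting any work. The sketch below assumes the NVIDIA driver is installed so that the `nvidia-smi` command-line tool is on the PATH; the helper names `parse_gpu_list` and `list_gpus` are illustrative, not part of any library.

```python
import shutil
import subprocess

# Ask nvidia-smi for one CSV line per GPU: "<name>, <total memory>".
QUERY = ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"]


def parse_gpu_list(csv_text):
    """Turn nvidia-smi CSV output into a list of {'name', 'memory'} dicts."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, _, memory = line.partition(",")
        gpus.append({"name": name.strip(), "memory": memory.strip()})
    return gpus


def list_gpus():
    """Return the GPUs visible on this machine, or [] if none can be queried."""
    if shutil.which("nvidia-smi") is None:
        return []  # driver tools not installed (e.g. a CPU-only instance)
    result = subprocess.run(QUERY, capture_output=True, text=True, check=False)
    if result.returncode != 0:
        return []
    return parse_gpu_list(result.stdout)


if __name__ == "__main__":
    found = list_gpus()
    print(f"{len(found)} GPU(s) visible")
    for gpu in found:
        print(" ", gpu["name"], "-", gpu["memory"])
```

An empty result on a GPU instance usually points to a missing driver or an image that was not built for GPU use, which is cheaper to discover now than halfway through a training run.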

Optimization Tips for Performance

Maximizing the performance of cloud GPUs ensures faster results and efficient use of resources. Here are some key tips:

Leverage Parallel Processing

GPUs excel in parallel computing, so ensure your algorithms are optimized to utilize the full power of the GPU cores.
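One common way to estimate how much a GPU can help is Amdahl’s law, a standard model that this guide does not otherwise rely on. The sketch below applies it under the assumption that a known fraction of the runtime can be parallelized; the fractions and the 50x GPU speedup in the example are hypothetical.

```python
def amdahl_speedup(parallel_fraction, parallel_speedup):
    """Overall speedup when only part of a program is accelerated.

    parallel_fraction: share of the original runtime that runs on the GPU (0..1).
    parallel_speedup:  how much faster that share runs on the GPU.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if parallel_speedup <= 0:
        raise ValueError("parallel_speedup must be positive")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / parallel_speedup)


if __name__ == "__main__":
    # Hypothetical pipeline where the GPU portion runs 50x faster.
    for fraction in (0.5, 0.9, 0.99):
        print(f"{fraction:.0%} parallel -> {amdahl_speedup(fraction, 50):.1f}x overall")
```

The takeaway is that the serial portion of a pipeline (data loading, preprocessing, file I/O) caps the overall gain, so profiling those stages is often as valuable as choosing a faster GPU.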

Choose the Right Instance Type

- For tasks involving deep learning and machine learning, consider using the NVIDIA V100 for training models that need high performance.

- For even more intensive workloads like climate simulations or molecular dynamics, the NVIDIA A100 provides superior GPU acceleration.

Optimize Algorithms Using CUDA

When running GPU-based computing, it’s essential to optimize your algorithms using CUDA (Compute Unified Device Architecture).

This ensures that your computations are fully accelerated by the GPU cores, reducing processing time significantly.

Common Tools for Cloud GPU Integration

When using Cloud GPUs, researchers need tools that seamlessly integrate with their workflows. Popular tools include:

- TensorFlow: Ideal for AI and machine learning models. TensorFlow supports GPU acceleration out of the box and integrates smoothly with platforms like Google Cloud and AWS.

- PyTorch: Another excellent choice for deep learning tasks, PyTorch is favored for its flexibility and GPU support. PyTorch integrates easily with AWS and Azure GPU instances.

- MATLAB: For scientific simulations and data analysis, MATLAB offers comprehensive support for GPU-based computing, making it a powerful tool for research in fields like bioinformatics and physics.

- R: Researchers in data science often rely on R for statistical computing, and it can leverage cloud GPUs for faster data analysis.
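As a small illustration of how these tools pick up a cloud GPU, the sketch below checks whether PyTorch can see a CUDA device and falls back to the CPU otherwise. It assumes PyTorch may or may not be installed; the helper name `pick_device` is illustrative.

```python
def pick_device():
    """Return "cuda" when PyTorch is installed and sees a GPU, else "cpu"."""
    try:
        import torch  # optional dependency; absent on a bare instance
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


if __name__ == "__main__":
    print("Running on:", pick_device())
```

In a PyTorch script, the returned string can be passed to `model.to(...)` and `tensor.to(...)`, so the same code runs on a laptop and on a cloud GPU instance without changes.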

How to Minimize Cloud GPU Costs for Long-Term Research Projects?

Pricing Models for Cloud GPUs

Understanding the right pricing model is key to managing costs when using Cloud GPUs for research. Here’s a breakdown of the main models:

- Pay-as-you-go: This model is ideal for short-term projects or when you need flexibility. You only pay for the GPU resources you use, with no long-term commitments. It’s perfect for experiments or small-scale research where demand fluctuates.

- Reserved Instances: For long-term, high-demand research tasks, reserved instances offer substantial savings. By committing to a 1- or 3-year plan, researchers can get up to 70% off compared to pay-as-you-go pricing, making it cost-effective for projects with sustained GPU needs.

- Academic Discounts and Grants: Many cloud providers, including AWS, Google Cloud, and Azure, offer special discounts and grant programs for academic researchers. For example, AWS Cloud Credits for Research provides up to $20,000 in credits for projects that contribute to scientific advancement. Similarly, Google Cloud for Education Grants offers free credits to universities and research labs. Researchers can apply directly through the AWS Research Grants portal, where they must outline the impact and scope of their project.
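The choice between pay-as-you-go and reserved pricing comes down to how many hours the GPU is actually busy. The sketch below compares the two under simplifying assumptions: a reserved instance is billed for every hour of the year whether used or not, the discount is the "up to 70%" figure quoted above, and the $3 hourly rate is a placeholder rather than real pricing.

```python
HOURS_PER_YEAR = 24 * 365


def yearly_costs(on_demand_rate, reserved_discount, utilization):
    """Return (pay-as-you-go cost, reserved cost) for one GPU over one year.

    utilization is the fraction of the year the GPU is actually in use (0..1).
    """
    pay_as_you_go = on_demand_rate * HOURS_PER_YEAR * utilization
    reserved = on_demand_rate * (1.0 - reserved_discount) * HOURS_PER_YEAR
    return pay_as_you_go, reserved


def cheaper_option(on_demand_rate, reserved_discount, utilization):
    """Name the cheaper pricing model under the assumptions above."""
    pay_as_you_go, reserved = yearly_costs(on_demand_rate, reserved_discount, utilization)
    return "reserved" if reserved < pay_as_you_go else "pay-as-you-go"


if __name__ == "__main__":
    for utilization in (0.10, 0.30, 0.60):
        print(f"{utilization:.0%} busy ->", cheaper_option(3.0, 0.70, utilization))
```

In this model the break-even utilization is simply one minus the discount, so with a 70% discount a reservation pays off once the GPU is busy more than roughly 30% of the time.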

Cost-Saving Tips for Researchers

To further reduce expenses, here are some actionable tips for minimizing Cloud GPU costs:

- Use Spot Instances: For non-critical tasks, consider using spot instances. These are unused cloud computing resources available at a discount of up to 90%. However, they can be interrupted, so they are best suited for workloads that can tolerate occasional downtime.

- Leverage Cloud Storage Efficiently: Storing large datasets in the cloud can add to your overall cost. To avoid extra data transfer fees, store only the essential datasets you need for immediate computation. Additionally, use multi-tiered storage solutions that automatically move less critical data to cheaper storage tiers.

- Optimize Job Scheduling: Plan your GPU usage to avoid idle time. Job scheduling ensures that GPU resources are used efficiently by aligning tasks in a way that maximizes GPU utilization, reducing the overall time spent on cloud services.
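Because spot instances can be interrupted, workloads that run on them need a way to resume. One common approach is checkpointing; the following is a minimal sketch that records progress in a JSON file after every item. The function names, the checkpoint layout, and the `stop_after` parameter (used here only to simulate an interruption) are all illustrative assumptions.

```python
import json
import os
import tempfile


def load_checkpoint(path):
    """Load saved progress, or start fresh if no checkpoint exists yet."""
    if not os.path.exists(path):
        return {"next_index": 0, "results": []}
    with open(path, "r", encoding="utf-8") as fh:
        return json.load(fh)


def save_checkpoint(path, state):
    """Write to a temp file, then swap it in, so a crash never leaves a half-written file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as fh:
        json.dump(state, fh)
    os.replace(tmp_path, path)


def run_resumable(items, process, path, stop_after=None):
    """Process items in order, checkpointing after each one.

    stop_after simulates a spot interruption after that many items in this run.
    """
    state = load_checkpoint(path)
    done_this_run = 0
    while state["next_index"] < len(items):
        if stop_after is not None and done_this_run >= stop_after:
            return state  # simulated interruption; progress is already saved
        state["results"].append(process(items[state["next_index"]]))
        state["next_index"] += 1
        save_checkpoint(path, state)
        done_this_run += 1
    return state


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        ckpt = os.path.join(workdir, "progress.json")
        run_resumable([1, 2, 3, 4, 5], lambda x: x * x, ckpt, stop_after=2)
        final = run_resumable([1, 2, 3, 4, 5], lambda x: x * x, ckpt)
        print(final["results"])
```

In practice the checkpoint would live on storage that outlives the instance, such as an object store or a network volume, rather than on the instance’s local disk.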

Ensuring Data Security and Compliance When Using Cloud GPUs for Research

Data Security Concerns for Sensitive Research

When dealing with sensitive data—such as patient genomes or healthcare records—data security and regulatory compliance are top priorities.

In fields like healthcare and genomics, researchers must ensure that their use of Cloud GPUs complies with laws like HIPAA (Health Insurance Portability and Accountability Act) and GDPR (General Data Protection Regulation). Failing to meet these regulations can result in legal repercussions and data breaches, both of which can severely damage research projects.

Cloud Platforms like AWS, Google Cloud, and Azure offer built-in compliance frameworks to address these concerns. These platforms provide tools for data encryption, access control, and audit logs to help researchers meet necessary regulatory standards.

For instance, AWS supports HIPAA-eligible services, while Google Cloud offers GDPR-compliant solutions for European researchers.

What Are the Best Practices for Securing Sensitive Data with Cloud GPUs?

To maintain strong research data security, here are some best practices to follow when using Cloud GPUs:

- Encryption: Always encrypt your data, both at rest and in transit. Most cloud platforms provide automatic encryption services, but researchers should ensure that encryption protocols (such as AES-256) are enabled to secure sensitive information.

- Multi-Factor Authentication (MFA): Implement multi-factor authentication for all user access to the cloud environment. This adds an extra layer of protection by requiring more than just a password to gain access. Additionally, use role-based access controls to ensure that only authorized personnel can access sensitive research data.

- Regular Security Audits: Perform routine security audits to identify and address any potential vulnerabilities. Cloud platforms like Azure offer integrated tools for monitoring cloud infrastructure, allowing researchers to continuously track the security health of their systems.
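The combination of multi-factor authentication and role-based access control described above can be pictured as a single access check. This is only a conceptual sketch: the role names and permissions are hypothetical, and in a real deployment these rules would be enforced by the cloud provider’s identity and access management service rather than by application code.

```python
# Hypothetical roles for a research project and what each may do.
ROLE_PERMISSIONS = {
    "principal_investigator": {"read", "write", "share"},
    "analyst": {"read", "write"},
    "collaborator": {"read"},
}


def is_allowed(role, action, mfa_verified):
    """Allow an action only if MFA succeeded and the role grants the action."""
    if not mfa_verified:
        return False  # a correct password alone is never enough
    return action in ROLE_PERMISSIONS.get(role, set())


if __name__ == "__main__":
    print(is_allowed("analyst", "write", mfa_verified=True))
    print(is_allowed("collaborator", "write", mfa_verified=True))
    print(is_allowed("principal_investigator", "share", mfa_verified=False))
```

Unknown roles fall through to an empty permission set, so the default is to deny access.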

Future Trends in Cloud GPUs for Scientific Research

Quantum Computing in the Cloud

Quantum computing represents the next frontier in cloud computing and is set to fundamentally reshape scientific research by solving problems currently out of reach for classical computers. Combining quantum computing with Cloud GPUs will bring unprecedented computational power to fields like materials science, drug discovery, and cryptography.

Quantum computers differ from classical systems by leveraging quantum bits (qubits), allowing them to handle multiple states simultaneously. This makes them ideal for performing advanced simulations, where classical computers struggle due to the sheer number of variables and calculations required.

Top Companies Integrating Quantum Computing

- Google Quantum AI: Google has been at the forefront of quantum computing research. Their Sycamore processor achieved quantum supremacy by solving a problem that classical supercomputers couldn’t match. Google’s integration of quantum computing with Google Cloud GPUs will allow researchers to conduct simulations in areas like molecular biology and quantum chemistry.

- IBM Quantum: IBM’s Qiskit platform is already working with researchers to accelerate simulations in areas such as drug discovery and chemical modeling. By integrating quantum computing with cloud GPU technologies, IBM is enabling real-time simulations at a quantum level, speeding up the discovery of new materials and improving optimization algorithms.

- Microsoft Azure Quantum: Microsoft is developing Azure Quantum, a cloud service combining quantum computing with cloud GPU technologies to provide accessible quantum tools for research institutions. Their work focuses on solving complex optimization and material design problems in partnership with organizations like 1QBit and IonQ.

| Company | Quantum Computing Integration | Applications in Research | Partnerships/Platforms |
|---|---|---|---|
| Google Quantum AI | Achieved quantum supremacy with Sycamore processor | Molecular biology, quantum chemistry | Google Cloud GPUs |
| IBM Quantum | Developed Qiskit platform for real-time quantum simulations | Drug discovery, chemical modeling | Cloud GPU integration with IBM Q |
| Microsoft Azure Quantum | Focuses on complex optimization and material design problems | Materials science, cryptography | Collaborations with 1QBit and IonQ |

The above table provides a side-by-side comparison of how leading companies like Google, IBM, and Microsoft are integrating quantum computing with Cloud GPU technologies. It also outlines specific research applications, such as molecular simulations, drug discovery, and cryptography.

Future Applications of Quantum-Enabled Cloud GPUs

- Materials Science: Quantum computing will allow researchers to simulate molecular interactions at the quantum level, unlocking the design of superconductors, lightweight alloys, and biocompatible materials faster than before.

- Drug Discovery: Quantum-enabled cloud GPUs will help researchers simulate complex chemical reactions in real time, which could significantly speed up the identification of new drug compounds. Companies like Pfizer and Boehringer Ingelheim are already exploring how quantum computing can enhance their drug development processes.

- Cryptography: Quantum computing poses both a challenge and an opportunity in cryptography. It has the potential to break traditional encryption algorithms, but quantum-secure encryption is a field that is rapidly advancing through the integration of quantum computing into cloud systems.

| Research Field | Impact of Quantum Computing with Cloud GPUs | Real-World Example |
|---|---|---|
| Materials Science | Simulate molecular interactions faster, unlocking new material designs | Development of lightweight alloys and superconductors |
| Drug Discovery | Speed up chemical reaction simulations, identifying drug compounds | Pfizer using quantum computing for drug research |
| Cryptography | Enhance security algorithms or break traditional encryption methods | IBM's focus on quantum-secure encryption |

The above table summarizes how fields like materials science, drug discovery, and cryptography will benefit from quantum advancements, enabling faster simulations and real-time processing of complex tasks.

Future Plans and Expectations

As quantum computing technology matures, its integration with Cloud GPUs is expected to lead to more powerful, quantum-hybrid systems. In the coming years, we can expect research institutions to adopt quantum-enabled Cloud GPUs to conduct real-time, large-scale simulations that were previously impossible with classical computing.

By 2030, quantum-enabled cloud systems will likely become mainstream for high-demand sectors such as pharmaceuticals, aerospace, and energy.

Emerging Cloud GPU Technologies

The release of next-generation GPUs, such as the NVIDIA A100, marks a significant milestone in scientific research, offering faster computation, better parallel processing, and enhanced GPU acceleration.

These GPUs are built for high-performance computing (HPC) tasks like AI-driven research, deep learning, and machine learning, allowing researchers to train models and process large datasets in record time.

The NVIDIA A100 is one of the most powerful GPUs widely offered by cloud providers for data science and AI-driven research. NVIDIA cites up to 20x the performance of the previous generation on certain AI workloads, which makes it well suited to large-scale scientific simulations in areas like genomics, climate science, and molecular simulations.

Organizations Using Cloud-Based NVIDIA A100 GPUs:

- Harvard University: Researchers at Harvard’s SEAS (School of Engineering and Applied Sciences) are using NVIDIA A100 GPUs for climate modeling. By leveraging the A100’s power, they’ve been able to simulate climate change effects in half the time, providing more accurate models of ocean currents, weather patterns, and CO2 absorption.

- Stanford University: Stanford has deployed NVIDIA A100 GPUs for deep learning applications in genomics and cancer research. These GPUs enable researchers to perform real-time DNA analysis on vast patient datasets, speeding up genome sequencing and enabling faster identification of genetic mutations related to cancer.

- Lawrence Livermore National Laboratory (LLNL): LLNL is using cloud-based NVIDIA A100 instances to conduct molecular dynamics simulations for material science research. By combining cloud GPU power with traditional HPC systems, they’ve reduced simulation times by 40%, helping accelerate the design of advanced materials.

| GPU Model | Key Features | Applications in Research | Institutions Using It |
|---|---|---|---|
| NVIDIA A100 | Up to 20x the performance of the previous generation (NVIDIA's figure, workload-dependent) | Deep learning, AI-driven research, genomics | Harvard SEAS, Stanford, Lawrence Livermore Lab |
| NVIDIA Tesla V100 | Optimized for parallel processing tasks | Climate modeling, molecular simulations | Leading AI and climate science labs |

The table above compares the NVIDIA A100 and Tesla V100 across research applications such as AI-driven research, genomics, and climate modeling. It highlights NVIDIA's claim of up to 20x higher performance for the A100 on certain workloads, which is why the A100 is often described as a game-changer for large-scale simulations.

Future Plans and Expectations:

As more institutions adopt NVIDIA A100 GPUs and other emerging cloud GPU technologies, the speed and efficiency of scientific research will continue to improve. Here’s what researchers and institutions expect:

- Faster Model Training: AI researchers expect to train deep learning models 50-100% faster with the A100, depending on the workload, which could bring breakthroughs in fields like AI drug discovery and biotechnology sooner.

- Greater Accessibility: Cloud providers like AWS and Google Cloud are working to make NVIDIA A100 instances more accessible to universities and research labs through academic grants and pay-as-you-go models, ensuring that smaller institutions can also benefit from this cutting-edge technology.
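The figures quoted in this section mix two different measures: "X% faster" (a throughput gain) and "X% less time" (a runtime reduction). The short sketch below converts between them so the numbers can be compared on one scale. The function names are illustrative, and the inputs are simply the percentages mentioned above, not new measurements.

```python
def runtime_fraction_from_faster(percent_faster: float) -> float:
    """Fraction of the original runtime left when work runs percent_faster % faster."""
    return 1.0 / (1.0 + percent_faster / 100.0)


def speedup_from_time_reduction(percent_less_time: float) -> float:
    """Speedup factor implied by cutting runtime by percent_less_time %."""
    return 1.0 / (1.0 - percent_less_time / 100.0)


# "50-100% faster" training leaves between 2/3 and 1/2 of the original runtime.
print(round(runtime_fraction_from_faster(50), 3))   # 0.667
print(round(runtime_fraction_from_faster(100), 3))  # 0.5

# A 40% cut in simulation time corresponds to roughly a 1.67x speedup.
print(round(speedup_from_time_reduction(40), 2))    # 1.67

# Finishing "in half the time" is a 2x speedup.
print(round(speedup_from_time_reduction(50), 2))    # 2.0
```

Read this way, a job that is "100% faster" and a job that finishes "in half the time" describe the same improvement.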

By adopting new GPU technologies like the NVIDIA A100, researchers are pushing the boundaries of what’s possible in AI-driven research, climate science, and biomedicine. As these technologies evolve, the possibilities for scientific breakthroughs will only continue to grow, positioning cloud GPUs as a critical tool in the future of scientific discovery.

Conclusion

The use of Cloud GPUs in scientific research offers numerous advantages, from cost-efficiency and scalability to enhanced performance in parallel processing. Whether you’re working in genomics, bioinformatics, climate modeling, or machine learning, Cloud GPUs provide the necessary computational power to handle large-scale datasets and accelerate complex simulations. With flexible pricing models like pay-as-you-go and reserved instances, researchers can optimize their resources while keeping costs in check.
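To make the choice between pay-as-you-go and reserved pricing concrete, here is a minimal break-even sketch. The hourly rate and the flat monthly reserved fee are placeholder values chosen for round numbers, not quotes from any provider, and real reserved plans often have terms (commitment length, upfront payment) that this simple model ignores.

```python
def on_demand_cost(gpu_hours: float, hourly_rate: float) -> float:
    """Pay-as-you-go: cost scales linearly with the GPU-hours actually used."""
    return gpu_hours * hourly_rate


def break_even_hours(hourly_rate: float, reserved_monthly_fee: float) -> float:
    """GPU-hours per month above which a flat reserved fee becomes cheaper."""
    return reserved_monthly_fee / hourly_rate


def cheaper_option(gpu_hours: float, hourly_rate: float, reserved_monthly_fee: float) -> str:
    """Name the cheaper plan for a given monthly usage (ties go to pay-as-you-go)."""
    if on_demand_cost(gpu_hours, hourly_rate) > reserved_monthly_fee:
        return "reserved"
    return "pay-as-you-go"


# Placeholder prices (assumptions for illustration only).
HOURLY_RATE = 3.00             # dollars per GPU-hour, on demand
RESERVED_MONTHLY_FEE = 1200.0  # dollars per month, flat

print(break_even_hours(HOURLY_RATE, RESERVED_MONTHLY_FEE))  # 400.0

for hours in (100, 700):
    print(hours, cheaper_option(hours, HOURLY_RATE, RESERVED_MONTHLY_FEE))
```

With these placeholder numbers, a team that uses a GPU only for occasional experiments stays well below the break-even point, while one that trains continuously would likely do better with a reserved plan.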

Platforms like AWS, Google Cloud, and Azure offer excellent tools for researchers, including NVIDIA Tesla V100 and A100 GPUs for GPU-accelerated computing.

These cloud providers also support integration with tools like TensorFlow, PyTorch, and MATLAB, ensuring a seamless transition into cloud-based research.
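As a small illustration of what that integration looks like in practice, the sketch below checks whether a cloud instance exposes a GPU to PyTorch before any work is scheduled on it. It assumes PyTorch may or may not be installed and falls back to the CPU in either case; the helper name `pick_device` is hypothetical.

```python
import importlib.util


def pick_device() -> str:
    """Return "cuda" when PyTorch is installed and can see a CUDA GPU, else "cpu"."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"  # PyTorch is not installed in this environment
    import torch  # imported lazily so the script still runs without PyTorch

    return "cuda" if torch.cuda.is_available() else "cpu"


device = pick_device()
print(f"Running on: {device}")
```

The same script can then run unchanged on a laptop and on a GPU instance, which is useful when testing code locally before paying for cloud time.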

If you’re ready to take your research to the next level, now is the time to explore Cloud GPUs. Many platforms offer free trials and academic discounts, providing a low-risk way to see firsthand how cloud computing can accelerate your research. Explore AWS, Google Cloud, and Azure for details on their offerings, and check out their academic programs to get started on your journey toward faster, more efficient scientific discovery.